Trace and monitor AI agents and applications in production to detect anomalies, debug issues, and drive continuous improvement.


Compute safety, quality, and performance metrics across your data to detect agent failures in production.

Capture user feedback to track performance and user experience across your AI applications.

Visualize complex agentic workflows as DAGs to understand and debug critical error cascades.

Save custom charts to your team workspace for quick access to the insights that matter most to you.

Slice and dice your data across segments and get detailed insights into application performance.

Log application data synchronously or asynchronously using our OpenTelemetry-native SDK.

LLM applications often fail in unexpected ways in production. HoneyHive lets you monitor your LLM apps with quantitative rigor and get actionable insights to continuously improve them.

Log LLM application data with just a few lines of code

Enrich logs with user feedback, metadata, and user properties

Query logs and save custom charts in your team dashboard


LLM apps fail because of issues in the prompt, the model, or your data retrieval pipeline. With full visibility into the entire chain of events, you can quickly pinpoint errors and iterate with confidence.

Debug chains, agents, tools and RAG pipelines

Root-cause errors with AI-assisted root cause analysis (RCA)

Integrates with leading orchestration frameworks


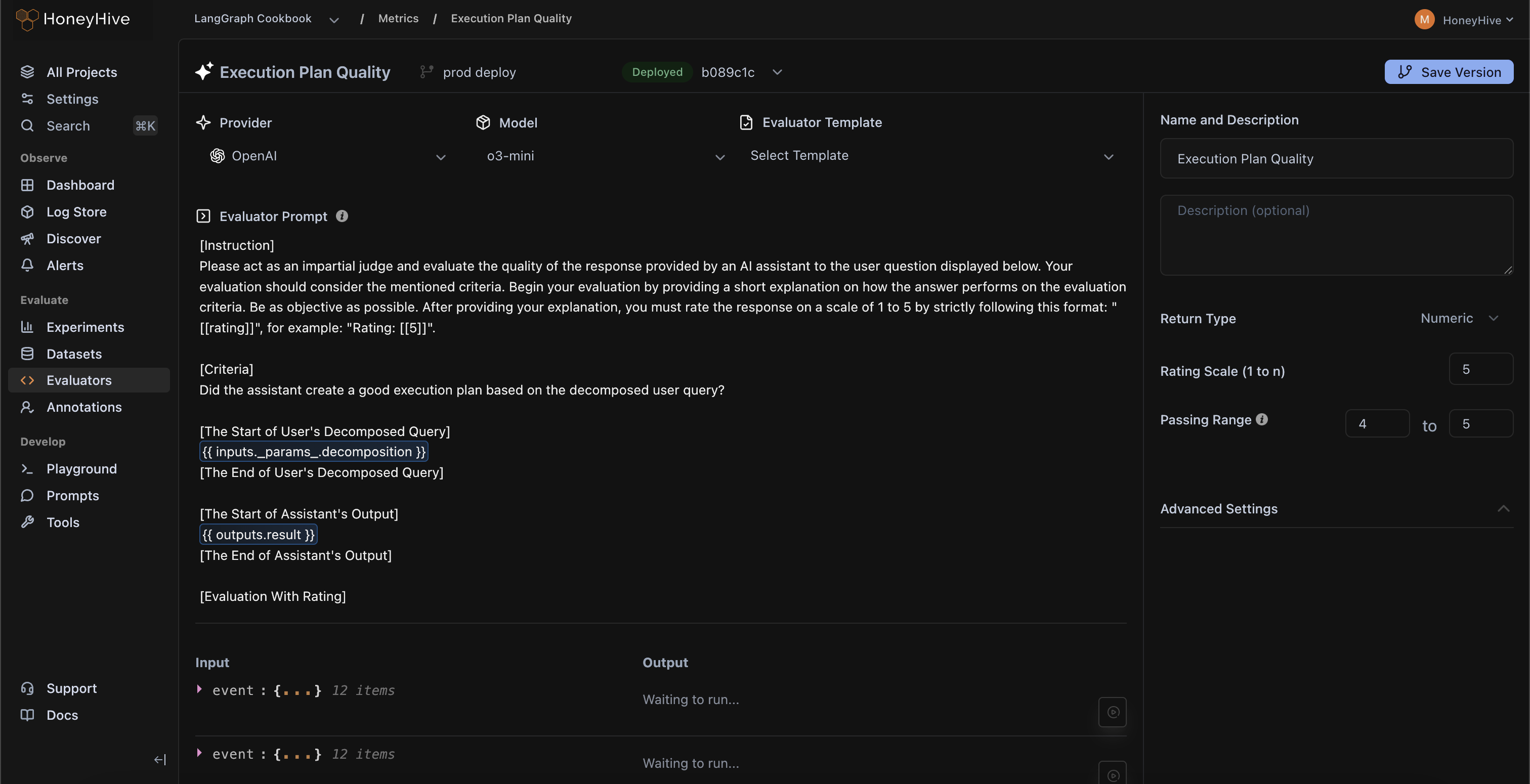

Run online evaluators on your live production data to catch LLM failures automatically.


Evaluate faithfulness and context relevance across RAG pipelines


Write assertions to validate JSON structures or SQL schemas
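
As a rough illustration of what a JSON structure assertion might check, here is a minimal sketch in plain Python. It does not use the HoneyHive SDK; the function name `validate_json_output` and the `required` mapping are hypothetical, chosen only for this example.

```python
import json

def validate_json_output(raw, required):
    """Return a list of problems; an empty list means the output passed.

    `required` maps each expected key to the Python type its value should have.
    (Hypothetical helper for illustration, not part of any SDK.)
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]

    problems = []
    for key, expected_type in required.items():
        if key not in data:
            problems.append(f"missing key: {key}")
        elif not isinstance(data[key], expected_type):
            problems.append(
                f"wrong type for {key}: expected {expected_type.__name__}"
            )
    return problems
```

An evaluator built this way could treat any non-empty result as a failed assertion and attach the problem list to the trace for debugging.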


Implement moderation filters to detect PII leakage and unsafe responses
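
To make the idea of a PII moderation filter concrete, the sketch below scans a response for two simple patterns using regular expressions. The patterns and the `find_pii` helper are assumptions made for illustration; a production filter would likely cover many more categories and may rely on a dedicated model or service.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def find_pii(text):
    """Return a dict of pattern name -> matches found in `text`.

    An empty dict means none of the patterns matched.
    """
    hits = {}
    for name, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits
```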


Catch agentic failures like tool misuse or looping
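
One simple way to flag looping is to look for the same tool call repeated back to back. The following is a minimal sketch under that assumption; the `detect_tool_loop` name, the tuple representation of a tool call, and the default threshold of three are all hypothetical choices, not HoneyHive's detection logic.

```python
def detect_tool_loop(tool_calls, max_repeats=3):
    """Return True if any identical call occurs `max_repeats` times in a row.

    `tool_calls` is a list of (tool_name, arguments_string) tuples in call order.
    """
    run_length = 0
    previous = None
    for call in tool_calls:
        # Extend the current run if the call repeats, otherwise start a new run.
        run_length = run_length + 1 if call == previous else 1
        if run_length >= max_repeats:
            return True
        previous = call
    return False
```

This only catches exact consecutive repeats; cycles that alternate between two or more tools would need a different check.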


Calculate NLP metrics such as ROUGE-L or Edit Distance
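
For readers unfamiliar with these metrics, the sketch below computes Levenshtein edit distance over characters and a simplified ROUGE-L score over whitespace-separated tokens. It reports the plain F1 of LCS-based precision and recall, which is an assumption made for brevity; the original ROUGE-L definition uses a weighted F-measure, and library implementations may tokenize differently.

```python
def edit_distance(a, b):
    """Levenshtein distance between strings a and b (row-by-row DP)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def lcs_length(x, y):
    """Length of the longest common subsequence of sequences x and y."""
    prev = [0] * (len(y) + 1)
    for xi in x:
        curr = [0]
        for j, yj in enumerate(y, start=1):
            if xi == yj:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]

def rouge_l_f1(candidate, reference):
    """Simplified ROUGE-L: F1 of LCS precision and recall over tokens."""
    cand_tokens, ref_tokens = candidate.split(), reference.split()
    lcs = lcs_length(cand_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```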

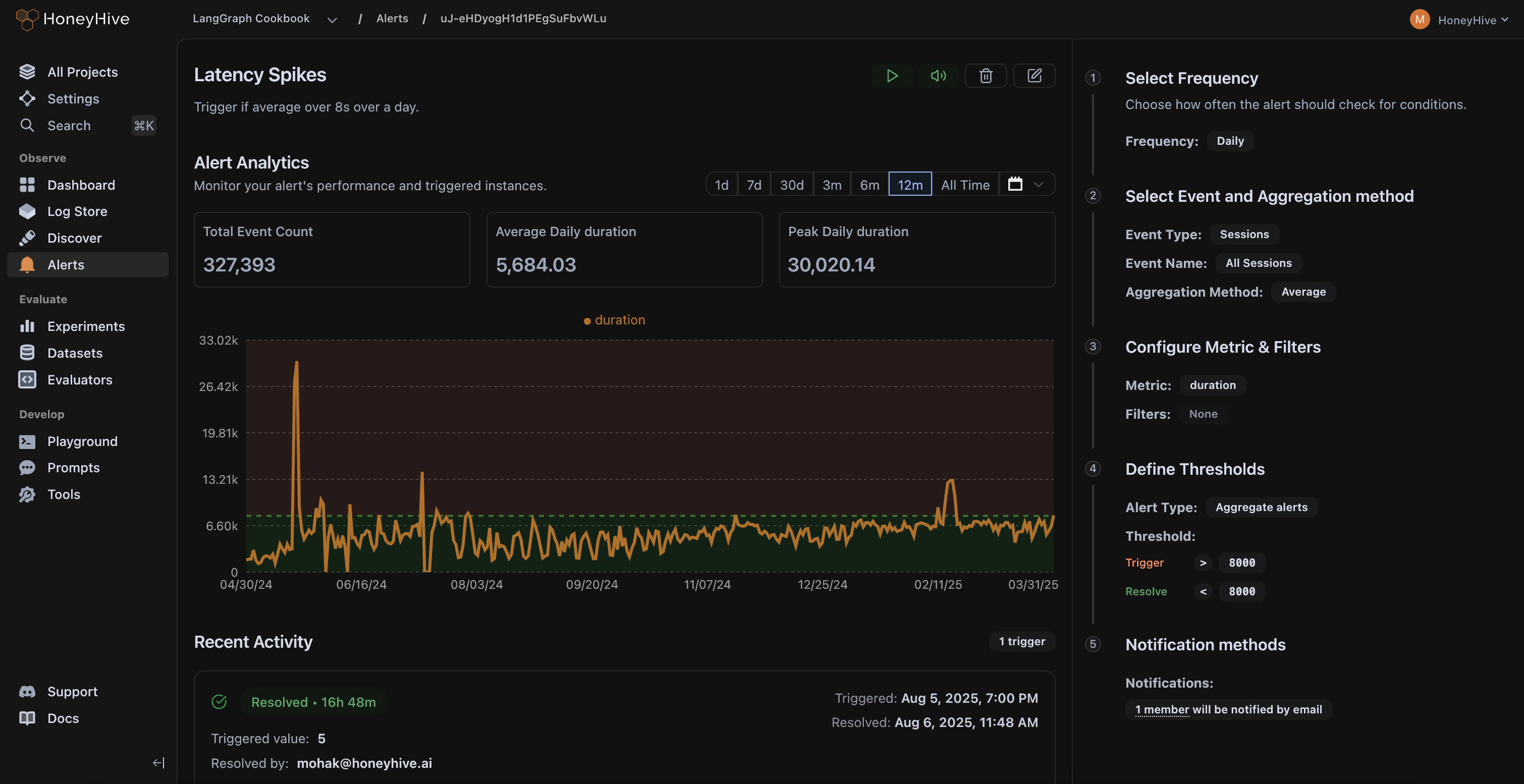

HoneyHive enables you to set up targeted alerts on any schema property to track critical incidents, and run automations to triage and root-cause issues.

Get alerts on cost, latency, accuracy, or guardrail violations

Escalate failing traces to domain experts for human review

Curate datasets from failing traces for future evaluations and resolutions
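
The alerting idea above boils down to comparing metric values against thresholds. Here is a minimal sketch of that comparison in plain Python; the `check_alerts` function and the `("max" | "min", limit)` rule format are hypothetical and do not reflect HoneyHive's alert configuration.

```python
def check_alerts(metrics, rules):
    """Return the names of metrics that violate their rule, in rule order.

    `rules` maps a metric name to ("max", limit) or ("min", limit):
    "max" fires when the value exceeds the limit (e.g. cost, latency),
    "min" fires when the value drops below it (e.g. accuracy).
    Metrics missing from `metrics` are skipped.
    """
    fired = []
    for name, (kind, limit) in rules.items():
        value = metrics.get(name)
        if value is None:
            continue
        if (kind == "max" and value > limit) or (kind == "min" and value < limit):
            fired.append(name)
    return fired
```

For example, with rules for latency, accuracy, and cost, a window where latency is too high and accuracy too low would return both metric names, which could then be routed to a reviewer or added to a dataset of failing traces.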