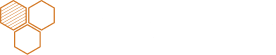

Evaluate AI agents and applications to measure performance, catch regressions, simulate tricky scenarios, and ship to production with confidence.

Define your own code or LLM evaluators to automatically test your AI pipelines against your custom criteria, or define human evaluation fields to manually grade outputs.

Evaluation runs can be logged programmatically and integrated into your CI/CD workflows via our SDK, allowing you to check for regressions.
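As a rough illustration of what a regression check in CI can look like, the sketch below compares aggregate metrics from a baseline run against a candidate run. It does not use the HoneyHive SDK: `RunSummary`, `find_regressions`, the metric names, and the `tolerance` threshold are all hypothetical, and the sketch assumes higher scores are better for every metric.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    """Aggregate metrics for one evaluation run (hypothetical structure)."""
    name: str
    metrics: dict[str, float]


def find_regressions(baseline: RunSummary, candidate: RunSummary,
                     tolerance: float = 0.02) -> dict[str, float]:
    """Return each metric whose score dropped by more than `tolerance`.

    Assumes higher is better for every metric.
    """
    regressions = {}
    for metric, base_score in baseline.metrics.items():
        new_score = candidate.metrics.get(metric)
        if new_score is None:
            continue  # metric was not reported in the candidate run
        drop = base_score - new_score
        if drop > tolerance:
            regressions[metric] = round(drop, 4)
    return regressions


# Illustrative numbers only, not real results.
baseline = RunSummary("main", {"faithfulness": 0.91, "json_valid": 1.0})
candidate = RunSummary("pr-branch", {"faithfulness": 0.84, "json_valid": 1.0})
regressions = find_regressions(baseline, candidate)
print(regressions)
```

In a CI job, a non-empty result could be turned into a failing build, for example by calling `sys.exit(1)` when `regressions` is not empty.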

Get detailed visibility into every step of your LLM pipeline for each evaluation run, helping you pinpoint the source of a regression as you run experiments.

Save, version, and compare evaluation runs to create a single source of truth for all experiments and artifacts, accessible to your entire team.

Capture underperforming test cases from production and add corrections to curate golden datasets for continuous testing.

We automatically parallelize requests and metric computation to speed up large evaluation runs spanning thousands of test cases.
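To give a sense of why parallelism matters for large runs, here is a generic sketch that fans test cases out across a thread pool. It is not a description of how HoneyHive implements this internally: `run_pipeline`, `exact_match`, and `evaluate_all` are hypothetical stand-ins, and a thread pool is only one reasonable choice when the work is dominated by network calls to a model.

```python
from concurrent.futures import ThreadPoolExecutor


def run_pipeline(test_case: dict) -> str:
    """Stand-in for a call to your AI pipeline (hypothetical)."""
    return test_case["input"].upper()


def exact_match(output: str, expected: str) -> float:
    """Toy metric: 1.0 when the output equals the expected string."""
    return 1.0 if output == expected else 0.0


def evaluate_case(test_case: dict) -> float:
    """Run the pipeline on one test case and score its output."""
    output = run_pipeline(test_case)
    return exact_match(output, test_case["expected"])


def evaluate_all(test_cases: list[dict], max_workers: int = 8) -> list[float]:
    """Evaluate test cases concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(evaluate_case, test_cases))


cases = [
    {"input": "hello", "expected": "HELLO"},
    {"input": "world", "expected": "WORLD"},
    {"input": "oops", "expected": "nope"},
]
scores = evaluate_all(cases)
print(scores)  # [1.0, 1.0, 0.0]
```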

HoneyHive enables you to test AI applications just like you test traditional software, eliminating guesswork and manual effort.

Evaluate prompts, agents, or retrieval strategies programmatically

Invite domain experts to provide human feedback

Collaborate and share learnings with your team

LLM apps fail because of issues in the prompt, the model, or your data retrieval pipeline. With full visibility into the entire chain of events, you can quickly pinpoint errors and iterate with confidence.

Debug chains, agents, tools and RAG pipelines

Root cause errors with AI-assisted RCA

Integrates with leading orchestration frameworks

HoneyHive enables you to filter and label underperforming data from production, curating "golden" datasets for evaluating your application.

Curate datasets from production, or generate them synthetically using AI

Invite domain experts to annotate and provide ground truth labels

Manage and version evaluation datasets across your project

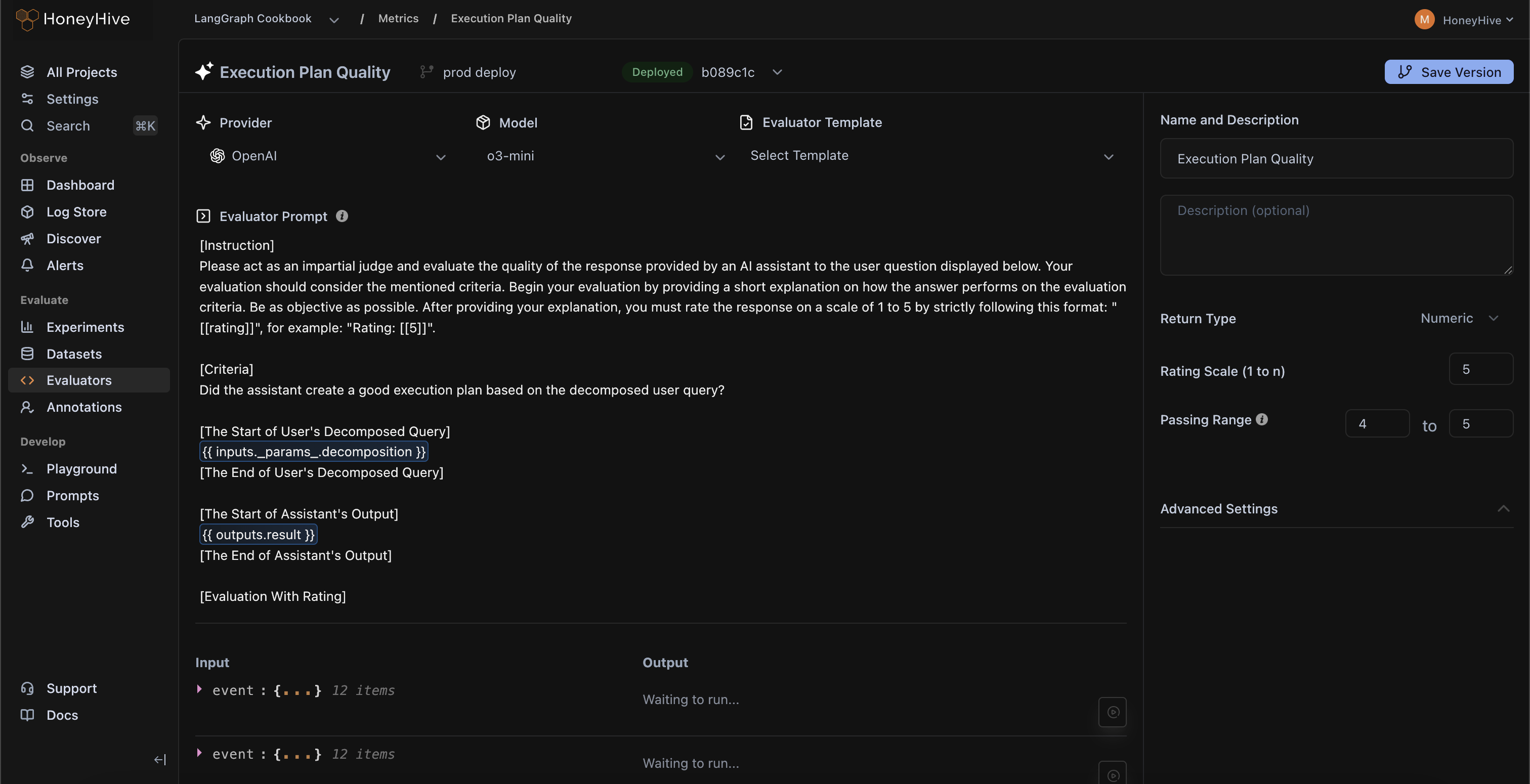

Every use case is unique. HoneyHive allows you to build your own LLM evaluators and validate them within the evaluator console.

Test faithfulness and context relevance across RAG pipelines

Write assertions to validate JSON structures or find keywords

Implement custom moderation filters to detect unsafe responses

Use LLMs to critique agent trajectory over multiple steps
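As an example of the code-based kind of evaluator listed above, the sketch below shows two plain Python checks: one asserts that an output is a JSON object with the required keys, the other looks for keywords. The function names, signatures, and sample strings are hypothetical and are not part of the HoneyHive SDK; how such a function is registered as an evaluator is not shown here.

```python
import json


def validate_json_structure(output: str, required_keys: set[str]) -> bool:
    """Return True if `output` parses as a JSON object with all required keys."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and required_keys.issubset(parsed)


def contains_keywords(output: str, keywords: list[str]) -> bool:
    """Return True if any keyword appears in `output` (case-insensitive)."""
    lowered = output.lower()
    return any(keyword.lower() in lowered for keyword in keywords)


# Illustrative model outputs.
structured = '{"answer": "Paris", "sources": ["doc-1"]}'
unstructured = "The answer is Paris"

print(validate_json_structure(structured, {"answer", "sources"}))    # True
print(validate_json_structure(unstructured, {"answer", "sources"}))  # False
print(contains_keywords("I cannot help with that.", ["cannot help"]))  # True
```

The same keyword check could serve as a very simple moderation filter by passing a list of disallowed terms, although production moderation typically needs more than substring matching.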